Avionics systems using artificial intelligence are being developed for eVTOL aircraft, but general aviation piston aircraft will be the first users. Avidyne and Daedalean formed a partnership in 2020 to develop the PilotEye system. Avidyne is aiming to gain FAA certification for the system this year, with concurrent validation by EASA. Schwinn, president and founder of Avidyne, expects to have finalized systems by the middle of the year. Development, validation and certification activities are ongoing under a Supplemental Type Certificate (STC) program with the FAA and EASA.

Visual acquisition

Avidyne was founded 27 years ago to bring large glass cockpit displays to general aviation aircraft. PilotEye functions will integrate with any standards-based traffic display, and the pilot will be able to use an iPad to zoom in on traffic. Enhanced features will be available when the system is installed with Avidyne displays.

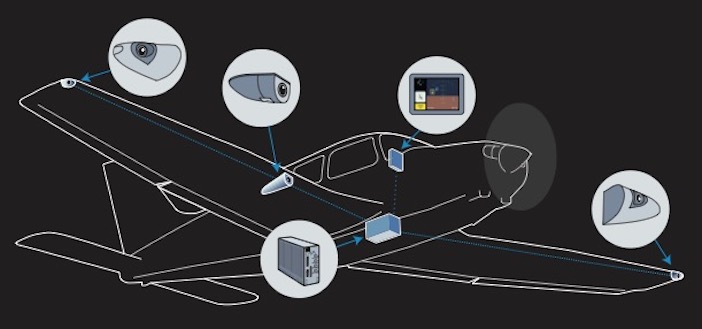

PilotEye detects aircraft, drones and obstacles. It can detect a Cessna 172 at 2 miles (3.2 km) and a Group 1 drone (20 lb, 9 kg). Avidyne installs traditional avionics hardware and Daedalean provides neural network software for flight test programs.

PilotEye is a real-time, critical application that uses neural networks to analyze camera imagery. Avidyne has flown hundreds of hours of flight tests with PilotEye and run thousands of hours of simulation. The forward-facing camera has a 60° field of view and each of the two side-facing cameras has an 80° field of view, producing a 220° panorama. The system will have three cameras initially, with an optional fourth camera to follow, which helicopter operators may point downward to find helipads or potential emergency landing sites.
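The panorama figure follows from simple addition of the three camera fields of view. The sketch below is only an illustration of that arithmetic: it assumes the cameras are mounted so that their fields of view abut without overlapping, which is what the quoted total implies. The function name and the `overlap_deg` parameter are hypothetical and are not part of PilotEye.

```python
def panorama_degrees(fovs_deg, overlap_deg=0.0):
    """Total horizontal coverage of a row of adjacent cameras, in degrees.

    fovs_deg: horizontal field of view of each camera.
    overlap_deg: assumed overlap between each neighbouring pair of cameras
    (hypothetical parameter; the article's figures imply zero overlap).
    """
    if not fovs_deg:
        return 0.0
    total = sum(fovs_deg) - overlap_deg * (len(fovs_deg) - 1)
    # Coverage cannot exceed a full circle.
    return min(float(total), 360.0)


# Side, forward and side cameras, using the figures quoted in the article.
print(panorama_degrees([80, 60, 80]))  # 220.0
```

Any overlap between neighbouring cameras would reduce the total, so the quoted figure is best read as an upper bound for this camera set.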

Neural networks

Daedalean has been developing neural network technology for aviation since 2016, mainly as flight control systems for autonomous eVTOL aircraft. It provides an evaluation kit of its computer vision-based situational awareness system, along with drawings and documentation, so that airframe and avionics companies and large fleet operators can install it on their own flight test aircraft. The two-camera evaluation kit provides visual positioning and navigation, traffic detection and visual landing guidance, displayed on a tablet computer in real time. Installation is not trivial: making the kit safe for flight takes more than duct tape. End users can buy or rent the evaluation kit, or partner with Daedalean long-term to develop advanced situational awareness systems.

Daedalean is developing a Level 1 AI/machine learning system to assess the performance of computer vision technology in real-world scenarios. Level 1 provides assistance to the human, Level 2 is human/machine collaboration, and Level 3 is a machine able to make decisions and take actions fully independently. Daedalean has collected 500 hours of flight test video recordings in rented GA aircraft and helicopters for its situational awareness system, and has gathered 1.2 million still images taken during 7,000 encounters between the data-gathering aircraft and another aircraft serving as the target. The code is frozen and released to partners using Daedalean evaluation kits, and feedback from these users guides the next release. The aim is eventually to interface the system with flight controls to avoid hazards such as obstacles and terrain, and to communicate with air traffic control and other Daedalean-equipped aircraft.
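The three AI/machine learning levels mentioned above can be written down as a small enumeration. This is only an illustrative sketch of the classification as the article describes it; the class, member and function names are hypothetical and do not come from Daedalean or any regulator's software.

```python
from enum import IntEnum


class AILevel(IntEnum):
    """AI/machine learning levels as summarized in the article."""
    ASSISTANCE = 1     # the system assists the human
    COLLABORATION = 2  # human and machine work together
    INDEPENDENT = 3    # the machine decides and acts fully independently


def acts_independently(level):
    """True only for the level at which the machine decides and acts alone."""
    return level == AILevel.INDEPENDENT


# The equipment discussed in the article is described as Level 1.
current = AILevel.ASSISTANCE
print(current.name, acts_independently(current))
```

Using an ordered enumeration makes it easy to compare levels, for example to check that a given system stays below the fully independent level.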

Certification procedure

Daedalean is working with regulators to develop an engineering process to be applied to AI and machine learning avionics during certification. The standard software development process follows a V-shaped method, which confirms that software conforms to requirements, standards and procedures. EASA and Daedalean have created a W-shaped process to guide certification efforts, with the middle of the W used to verify the learning process and ensure that the learning technique is correctly applied. The FAA has also evaluated whether visual-based AI landing assistance could back up other navigation systems during a GPS outage. Avionics supplier Honeywell has also partnered with Daedalean to develop and test avionics for autonomous takeoff and landing, as well as GPS-independent navigation and collision avoidance.

Honeywell Ventures is an investor in Daedalean. The Swiss company has established a US office near Honeywell’s headquarters in Phoenix.

The FAA is also working to bring AI and neural network machine learning to general aviation cockpits by funding R&D with US research organization MITRE. Software engineer Matt Pollack has been working on the digital copilot project, which began in 2015. Flight testing of the first algorithms began in 2017 with a Cessna 172, and since then a total of 50 flight test hours have been conducted in light aircraft and helicopters. The cognitive assistance provided by the digital copilot works in the way that voice assistants such as Apple’s Siri or Amazon’s Alexa do on the ground.

The digital copilot aids a pilot’s cognition without replacing it and is enabled by automatic speech recognition and location awareness. The algorithms provide useful information through audio and visual notifications, based on the context of what the pilot is trying to accomplish. The system can also provide memory prompts.